They started in June 2019 with version 0.2 ( link). They created something called “Delta Lake” on Apache Spark which has all those wonderful features above. Who are Delta? Well here’s their website. We should thank those people at Delta for this wonderful capabilities. Yes, databases and tables! Isn’t wonderful? Your data lakehouse consists of databases and tables. If your data lake is a data lakehouse, you can use SQL language to query the data lake. Not with this special data lake called data lakehouse. So those BI tools will have to open the files and read them as files! Why? Because a data lake is just a collection of files. But when they query a data lake, they can’t use SQL language. Many BI tools use SQL language to query databases (well all BI tools, actually). And other SQL statement such as group by etc. You can query a data lakehouse by simply doing “select * from Table1 where column1 = criteria”. Yes, the good old SQL! The SQL language that we all have been using for 50 years. Oh, I forgot to mention one very important feature! A data lakehouse uses SQL language.

How do we do that in a data lakehouse? By querying the data lakehouse using TIMESTAMP AS OF clause, like this: SELECT * FROM Table1 TIMESTAMP AS OF '' We can use this “time travel” capability for debugging or for auditing. We can replay a machine learning input (or output) as it was before it was changed. We can rerun a report as it was last year. We can query an older snapshot of a table ( link). Meaning that we can query the data as it is today, but we can also query the data as it was at some point in the past.Ī data lakehouse has this capability. One of the key features of a data warehouse is the ability to do “time travel”. Yup, just like a relational database, it uses transaction logs. This means that the data would not be corrupted by system crashes or power failure.Ī data lakehouse can achieve this ACID capability using transaction logs. It has a concept of durability, meaning that data changes would be saved, even when the disk or the server failed. when we have multiple changes either all changes are executed successfully or the none of them is not executed. It is a data lake, but like a database it has the concept of atomicity, consistency, isolation and durability ( ACID). It is not a database.īut a data lakehouse is different. A data lake is just a collection of files. atomicity, consistency, isolation, durability. There is no concept of a transaction, to ensure that multiple data changes must be treated as one unit (transaction, or atomicity). When multiple users are reading and writing from and to the same table all at once, there is no way to ensure that they don’t interfere each other (isolation concept). There is no way to ensure data reliability. When users read the data lake whiles some process is ingesting data into it, there is no way to ensure data consistency. Usually a data lake does not have data integrity. We can directly point the BI tool to the data lakehouse. We don’t need to build a data warehouse/mart. With a data lakehouse, we don’t need to do that. Then we point our BI tool such as Power BI or Tableau to this data warehouse/mart. Usually we read data from a data lake and load it into a data warehouse/mart so that we can have a star schema.

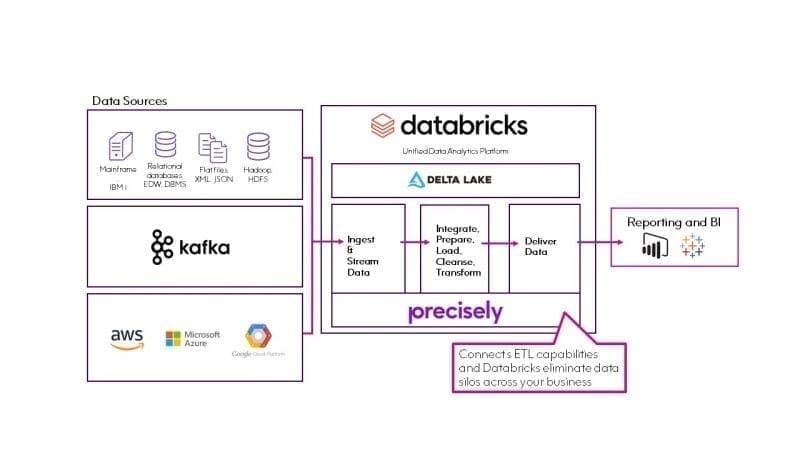

Usually a data lake does not have a schema. For a paper on Data Lakehouse see here: link. Data Lakehouse is a data lake which has data warehouse features such as star schema, transaction, data integrity, BI tools, data governance, scalability, data ingestion from various sources, and can do “time travel” ( link, link).

0 kommentar(er)

0 kommentar(er)